In highly specialized niches, such as AI-driven chip manufacturing, military applications, and advanced research, AI already outperforms humans on specific tasks. These are narrow applications, however, not the broad Artificial General Intelligence (AGI) that Kurzweil and others envision. Kurzweil predicts AGI could emerge around 2029, but that date is far from certain; it could arrive sooner or later. Even once AGI is technically feasible, widespread adoption could take another decade or more, because meeting its immense computational demands will require substantial funding, engineering work, and new infrastructure.

As we move closer to this possibility, it’s important to distinguish between today’s advances and the ultimate goal of AGI. The upcoming release of GPT-5, for instance, is a step forward, but it is not AGI. The growing speed and capabilities of these models might suggest that we’re nearing the singularity, but we are still on the journey: perhaps further along, but not yet at the destination.

Today, AI continues to make rapid progress, particularly in specialized areas where it already exceeds human capabilities. AGI, by contrast, would be able to understand, learn, and apply knowledge across a broad range of tasks at or above human level, and it remains a future goal. Kurzweil’s 2029 prediction is intriguing, but even if it holds, putting AGI to work across society could take years of infrastructure building, funding, and technical refinement.

In the nearer term, we can expect significant advances in no-code software development platforms. These tools let non-technical users direct AI to write and run code, lowering the barrier to building software. This democratization of technology could unleash a wave of creativity, allowing people from diverse backgrounds to bring their ideas to life without mastering the technical details. Imagine driving a Tesla without needing to know how its electric motor works: that is the shift we’re heading towards.

Alongside no-code programming, agentic AI (AI that autonomously performs tasks and makes decisions) is poised to transform many industries. These systems can automate complex workflows, streamline operations, and support better decision-making. In creative fields, combining agentic AI with no-code tools could enable unprecedented experimentation. Together, these advances will likely accelerate innovation and fundamentally change how we work, bringing new opportunities and challenges as we approach the era of AGI.

Leopold Aschenbrenner’s article *Situational Awareness: The Decade Ahead* offers a compelling exploration of potential pathways to AGI and the immense computational power they might require. It’s an essential read for anyone interested in the future of AI.

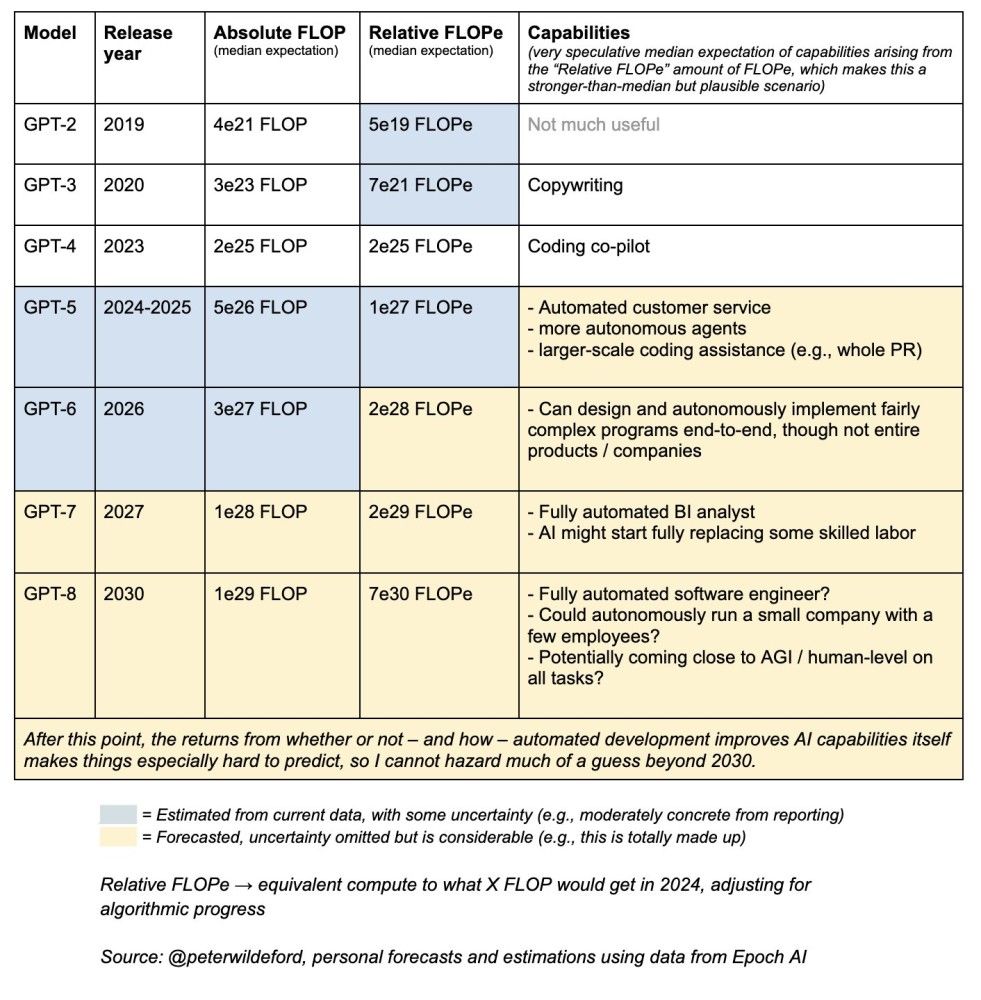

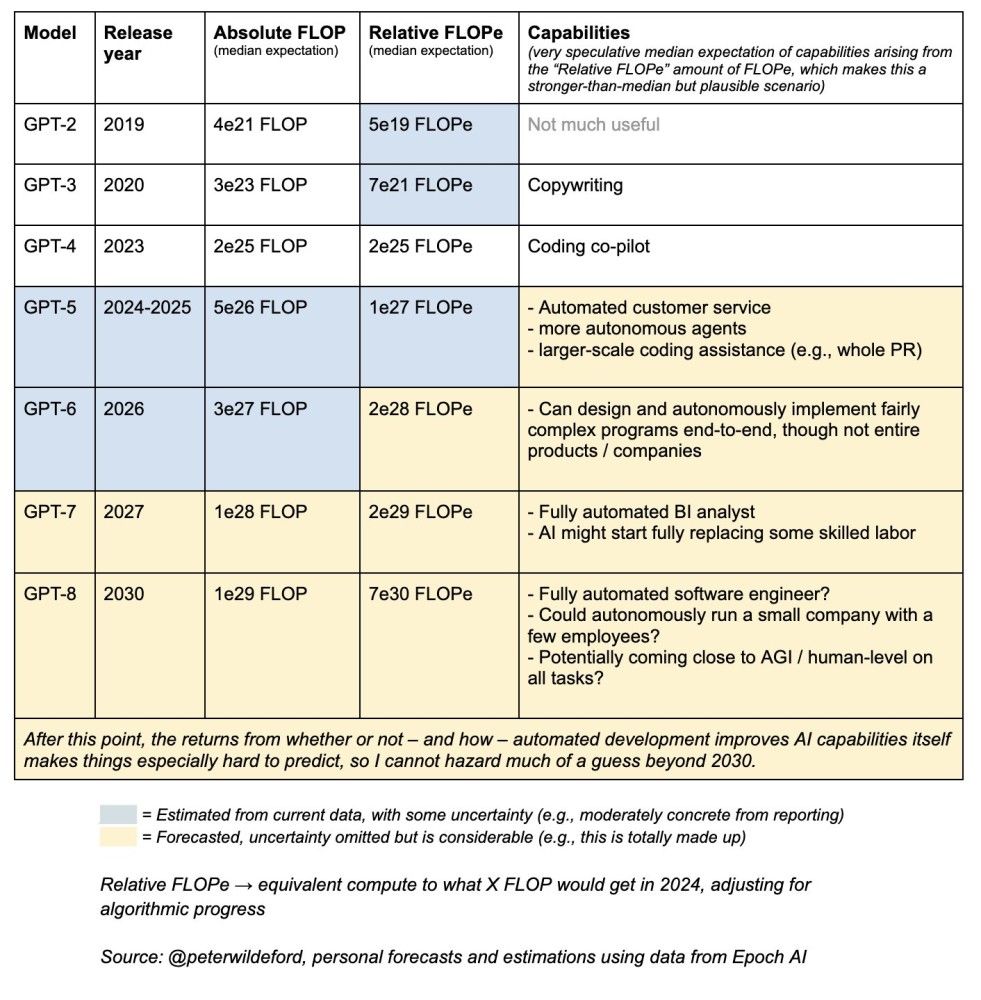

Recently, I also came across an intriguing post by Peter Wildeford that outlines a methodical, generation-by-generation path of AI improvements leading to AGI. His chart maps the projected evolution of GPT models from GPT-2 in 2019 to a speculative GPT-8 by 2030, using two key metrics: Absolute FLOP, the raw computing power used to train each model, and Relative FLOPe, its effective compute (raw compute adjusted for gains in algorithmic efficiency) expressed relative to a baseline model.
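To make the idea of effective compute more concrete, here is a minimal Python sketch of how such figures are often compared in orders of magnitude (OOMs). It assumes, as a simplification, that effective compute is just raw training FLOP multiplied by an algorithmic-efficiency factor. The function names and every number below are hypothetical illustrations, not values from Wildeford’s chart.

```python
import math


def effective_compute(raw_flop: float, algorithmic_multiplier: float) -> float:
    """Hypothetical model: raw training FLOP scaled by an efficiency multiplier."""
    return raw_flop * algorithmic_multiplier


def ooms_between(baseline: float, target: float) -> float:
    """Number of base-10 orders of magnitude separating two compute figures."""
    return math.log10(target / baseline)


# Made-up inputs for illustration only.
baseline = effective_compute(1e23, 1.0)  # earlier model, no efficiency gain
later = effective_compute(1e25, 10.0)    # 100x more raw FLOP, 10x more efficient

print(round(ooms_between(baseline, later), 2))  # 3.0
```

With these made-up inputs, a 100x increase in raw compute (two OOMs) plus a 10x efficiency gain (one OOM) adds up to three OOMs of effective compute; working in log10 turns those multiplicative gains into simple addition.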

Wildeford’s projections suggest the following:

- Near-Term (2024-2025): GPT-5 will likely enhance automated customer service, deploy more autonomous agents, and offer large-scale coding assistance, in line with the ongoing automation of routine tasks.

- Mid-Term (2026): GPT-6 could autonomously design and implement complex programs, marking a shift from supportive roles to more autonomous decision-making capabilities.

- Long-Term (2030): GPT-8 could function as a fully automated software engineer, potentially capable of running a small company autonomously—hinting at AI nearing AGI, with human-equivalent or superior capabilities across a broad range of tasks.

In summary, Wildeford’s projections offer a thought-provoking look at AI’s potential future. Some of these ideas are speculative, but they are grounded in trends we can already observe. They provide a useful framework for thinking about how AI might evolve over the next decade, though we should treat these forecasts with flexibility and caution, given how hard it is to predict technological progress.

The timeline for developing AGI is highly uncertain and shaped by several factors. Technological breakthroughs, such as quantum computing or new AI architectures, could accelerate progress, while global collaboration or competition might push nations to prioritize AGI development. Economic incentives, public support, and ethical considerations could either speed up or slow down the process, depending on how society and governments respond. Unexpected global events or crises, shifts in AI research paradigms, and the pace of societal adaptation will also shape when and how AGI emerges. While the timeline remains uncertain, understanding these dynamics is key to preparing for a future in which AGI could significantly reshape industries and society.